On 17 May 2024, we published an article under the title “Decentralized AI – Taming the Machine God with Blockchain Technology”.

Since then, humanity has taken a step towards the AI god: OpenAI has announced a $500b investment, while the decentralized community takes an agile approach towards ethical AI.

This is an updated version of the article to showcase the current state in the race for the world’s most powerful technology since nuclear science.

“The worst-case scenario for humanity with the development of super artificial intelligence is the potential for human extinction,” confesses GPT-4.

In a previous article, we explored how decentralized cloud computing can compete with a powerful sector concentrated in Silicon Valley.

Using this power to fuel artificial superintelligence (ASI), AI surpassing human intelligence, will move us disturbingly close to our own extinction. Is this scenario really inescapable? Or can decentralized AI technologies avert the danger?

To understand what we are talking about, let’s set the stage. Please welcome Microsoft’s very own machine god.

What is centralized AI and how does it lead us to the machine god?

“AI isn’t separate. AI isn’t even in some senses, new. AI is us. It’s all of us.” Mustafa Suleyman, CEO of Microsoft AI, describes it as a new digital species in his TED Talk “What Is an AI Anyway?”

“They communicate in our languages. They see what we see. They consume unimaginably large amounts of information. They have memory. They have personality.”

In a blog post titled Governance of superintelligence, authors Sam Altman, Greg Brockman, and Ilya Sutskever from OpenAI declare ASI to be inevitable – “So we have to get it right.”

“The prize for all of civilization is immense. We need solutions in health care and education, to our climate crisis.”

But what exactly is the cost to our civilization? Understanding the answer to this question will help grasp the significance of decentralized AI.

If god was Microsoft’s, would we be safe?

The central question remains – can we manage this new species, or will we give in to what Elon Musk estimates is a “10% or 20% chance” of human extinction?

Sam Altman, CEO of OpenAI, signed an open letter stating: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

To mitigate that risk, OpenAI suggests installing an oversight agency similar to the International Atomic Energy Agency (IAEA). This agency would set limits and track what fuels AI – computing power.

The machine god lives in a cloud-based supercomputer

Stargate is a $100 billion supercomputer set to launch in 2028 to train OpenAI’s superintelligence (ASI).

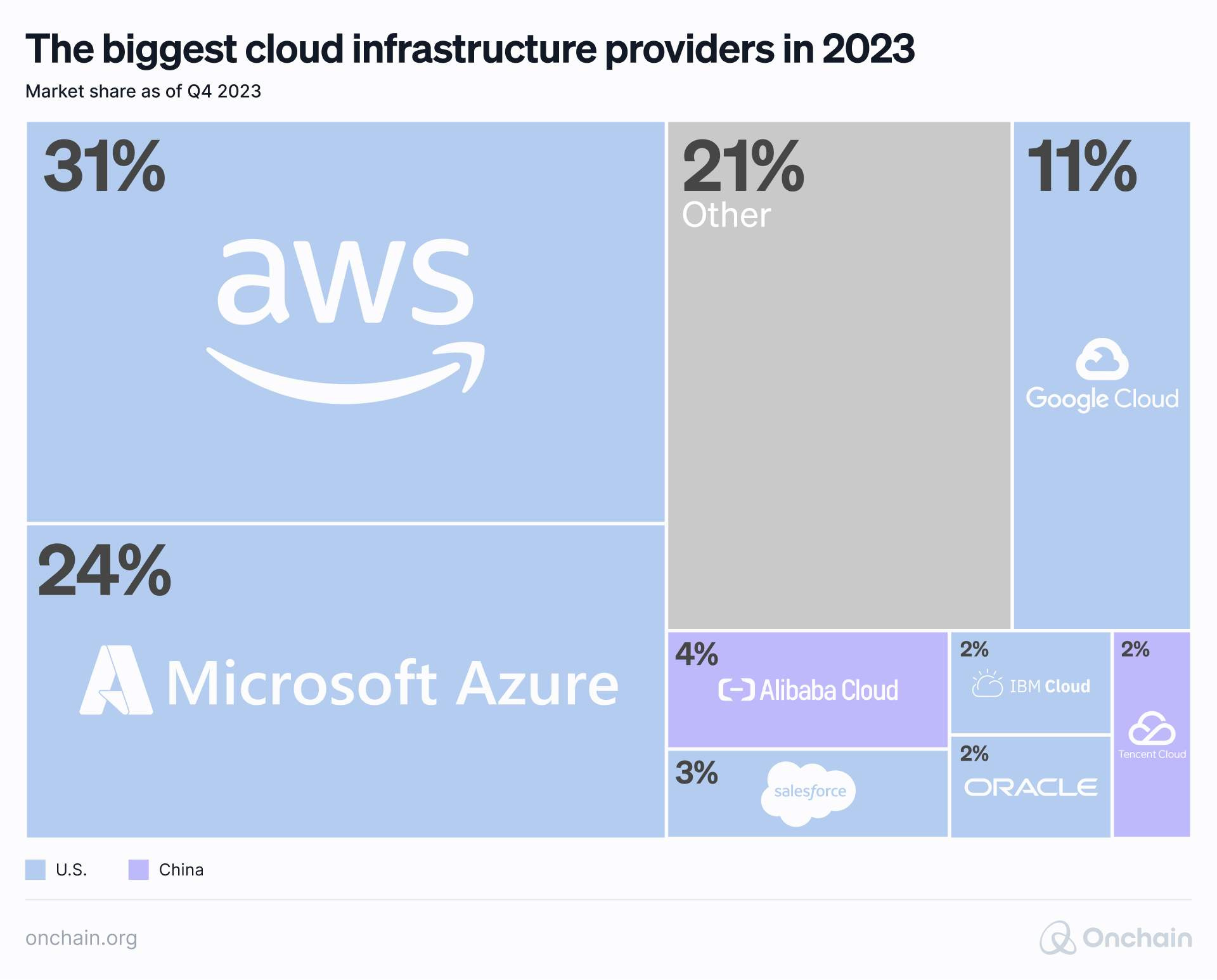

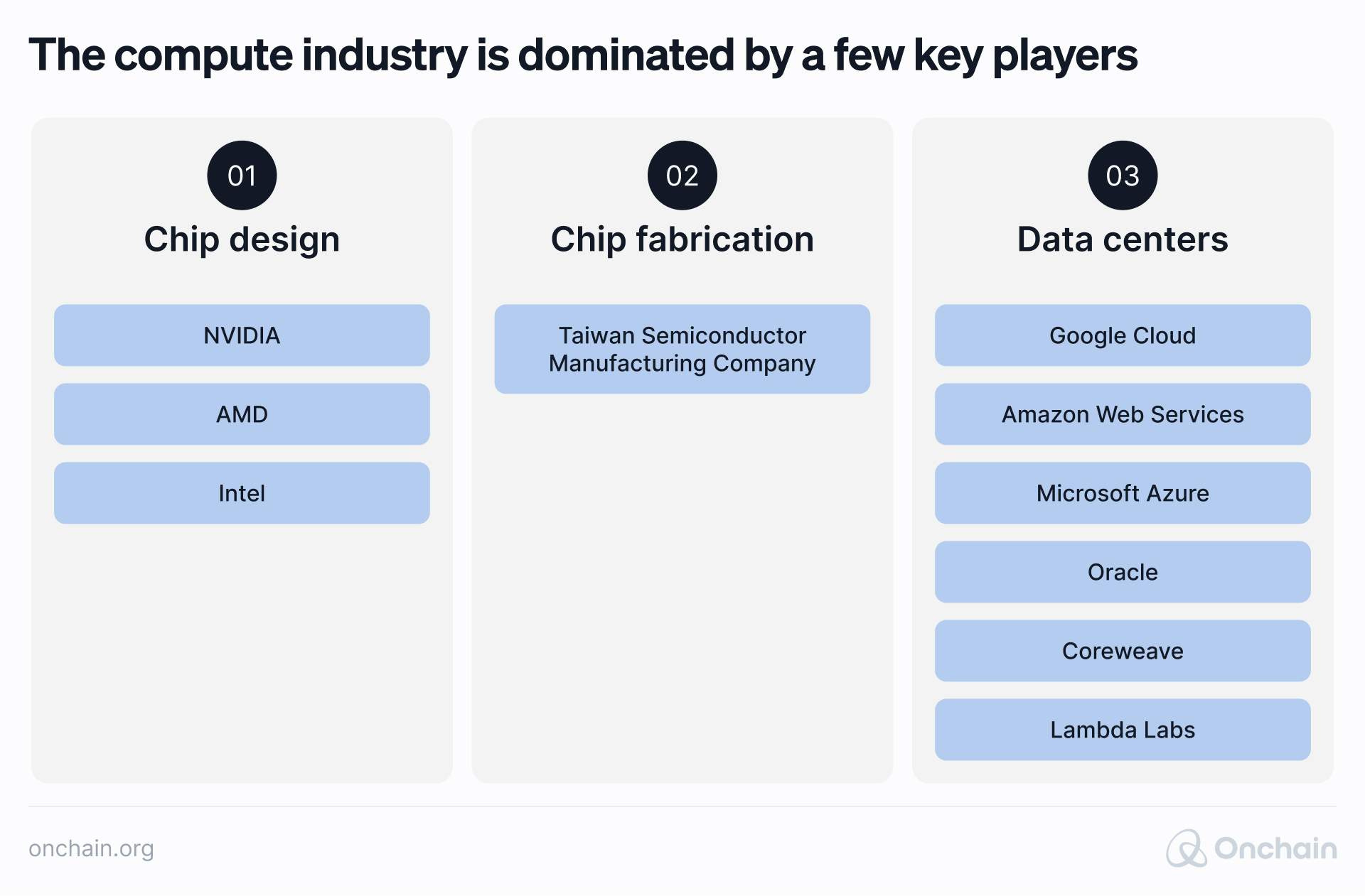

It’s symbolic of the continuation of a trend we’re seeing right now. The fuel of our next make-or-break technology is in the hands of a few superpowers: Amazon, Microsoft, and Google.

While some data centers for Stargate are already under construction, OpenAI announced the Stargate Project on 21 January 2025. The Stargate Project is a joint venture of SoftBank, OpenAI, Oracle, and MGX, which intends to invest $500b to build massive AI infrastructure in the United States.

Since our extinction is on the table, let’s invest some human intelligence to ask if an oversight agency that can “inspect systems, require audits, test for compliance” is an appropriate response to this concentration of power.

You guessed the answer.

Shall we bring out our favorite hero?

Make blockchains, not power centers! Use decentralized computing power

Let’s add decentralized cloud computing to the equation.

On average, 85% of the world’s GPUs are idle, Hackernoon reports. Elsewhere, there’s a digital species on a diet. Can blockchain technology bridge this gap while breaking this concentration of power?

The Golem Network has released a roadmap to democratizing AI computing power. By installing Golem software, providers and consumers can exchange computing power. The software gives access to a decentralized pool that distributes the workload across the network’s GPUs.

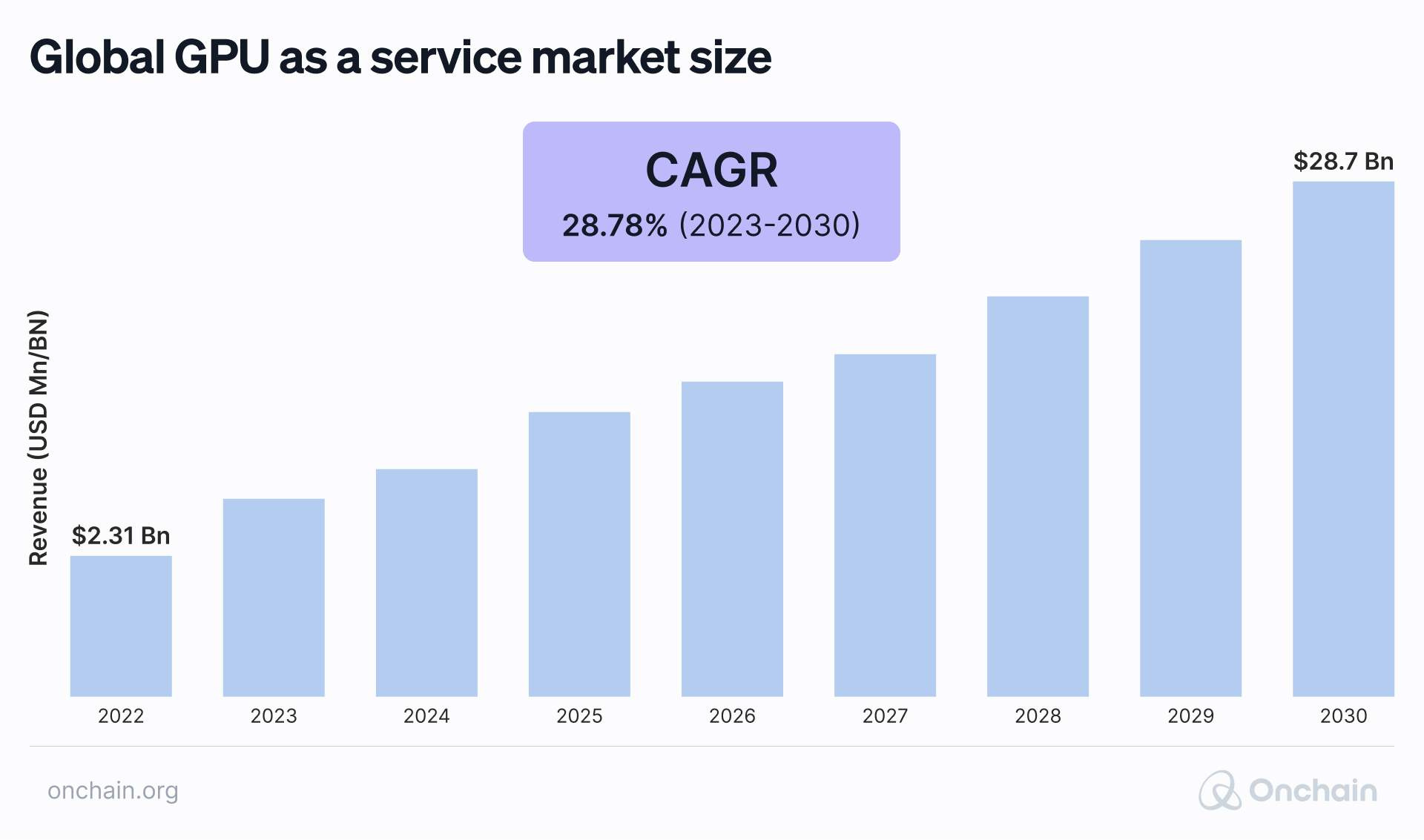

Golem is definitely onto something. Zion Market Research predicts that GPU as a service will grow from $2.31 billion in 2022 to $28.7 billion in 2030.
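
For a sense of what that forecast implies, here is a quick back-of-the-envelope calculation in Python. It assumes smooth compound growth between the two figures quoted above, which is our simplification and not something the forecast itself states. The function name `cagr` is ours.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $2.31 billion (2022) to $28.7 billion (2030).
# Assumption: growth compounds evenly over the eight years in between.
rate = cagr(2.31, 28.7, 2030 - 2022)
print(f"Implied growth: {rate:.1%} per year")
```

Under that assumption, the market would have to grow by roughly 37% every year for eight years in a row.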

But in reality, blockchain won’t allow us to tap into the kind of computing needed to train LLMs (Large Language Models) and AGI (Artificial General Intelligence), the precursor to ASI that OpenAI is working on.

It’s simply a software/hardware mismatch.

Decentralized computing power won’t train centralized AI

Compute ≠ compute – and decentralized computing power won’t democratize AGI.

In fact, general-purpose GPUs promoted by Golem and Render Network, such as the Nvidia RTX 30xx series, won’t train LLMs. LLMs and AGI require specialized hardware.

State-of-the-art (SOTA) chips, like Nvidia’s A100 and H100 chips, are extremely effective when it comes to training LLMs. Training an LLM with trailing node AI GPUs (chips several generations behind the leading edge) would be at least 33 times more expensive than using enterprise SOTA chips.

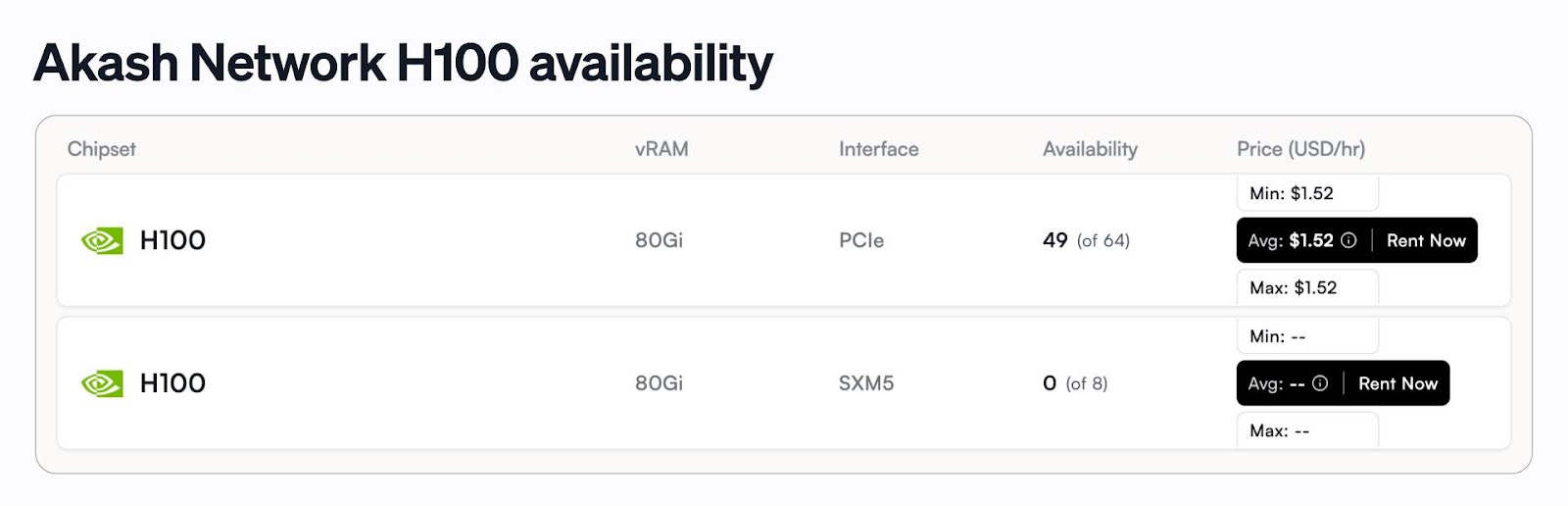

Other decentralized computing networks like Akash offer these chips at prices 85% below those of the big Silicon Valley cloud providers. But they are not immune to the SOTA GPU shortage.

When it comes to training state-of-the-art LLMs, decentralized computing has a twin challenge: a shortage of SOTA GPUs and the bandwidth needed to shuttle massive amounts of data between storage, memory, and GPU clusters at speed.

Blockchain won’t fix the SOTA GPU shortage – but maybe it doesn’t have to

As of 15 May 2024, the Akash Network had 71 SOTA (H100) chipsets available for its users.

By January 2025, this number had more than quadrupled to 319 H100s, signaling growing momentum in decentralized AI infrastructure.

To put that into perspective:

- The supercomputer that trained GPT-3, part of Microsoft’s Azure cloud computing platform, contains more than 285,000 CPU cores and 10,000 GPUs.

- GPT-4 was likely trained on 10,000 of Nvidia’s SOTA GPUs.

- Version 2 of xAI’s Grok LLM required 20,000 Nvidia H100 GPUs. Elon Musk anticipates that Version 3 will demand 100,000 H100s to train.

Stargate is likely to host even larger numbers of SOTA GPUs. The overwhelming demand for these chips further drives centralization within an industry already dominated by a few key players.

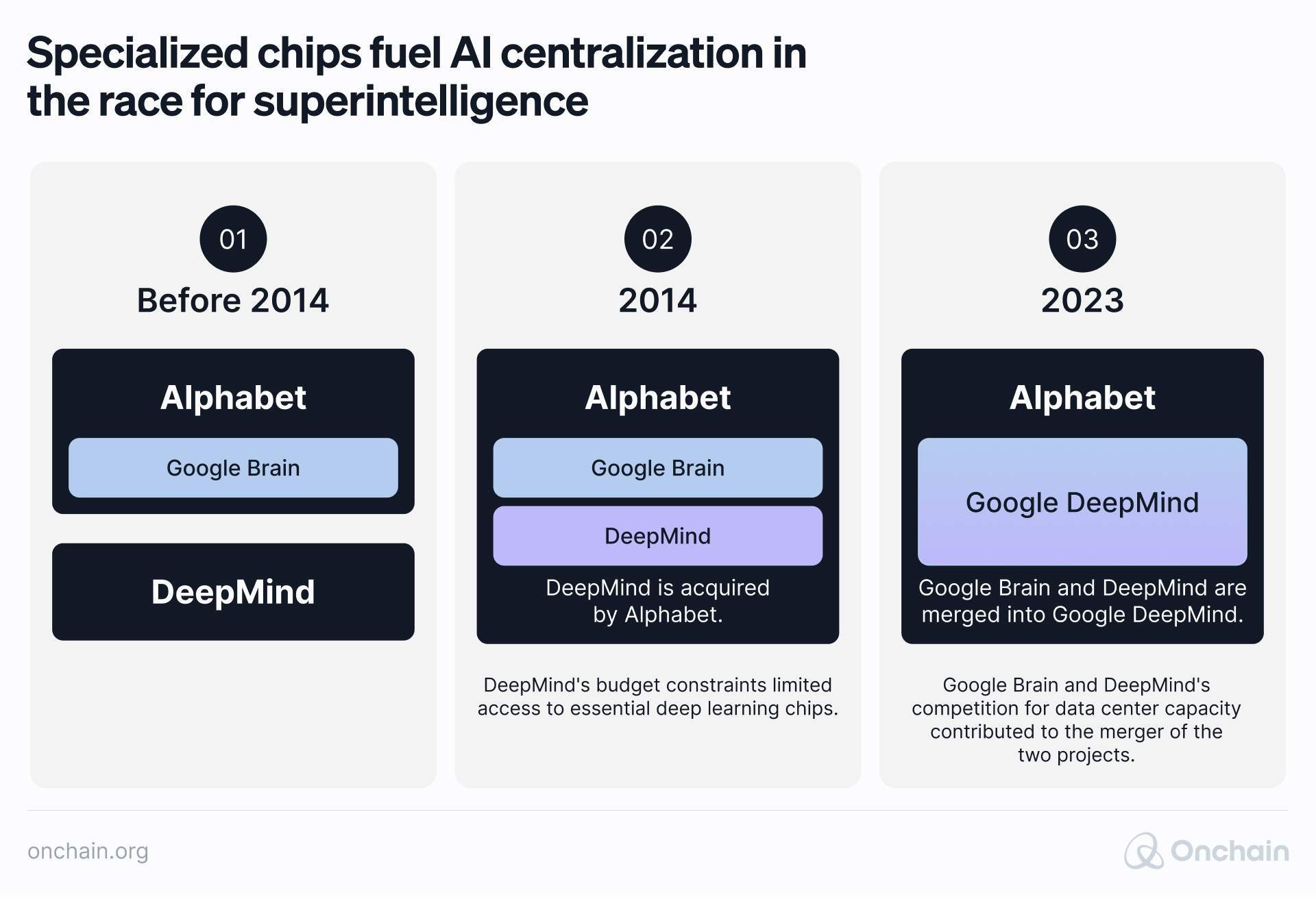

This problem is evident even within large corporations such as Alphabet, where the two competing AI teams, DeepMind and Google Brain, have had to merge due to limited data center capacity.

Akash’s GPU fleet, though growing impressively, is a drop in the ocean of Big Tech’s massive clusters.
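
To make the gap concrete, the short Python sketch below divides Akash's January 2025 H100 count by the cluster sizes quoted earlier. It is a crude comparison under our own assumptions: it counts GPUs only and ignores that the clusters use different chip generations. The names `CLUSTERS` and `fleet_share` are ours.

```python
AKASH_H100S = 319  # Akash's January 2025 figure quoted above

# GPU counts quoted above. Chip generations differ between these clusters,
# so this compares raw counts only, not actual training capacity.
CLUSTERS = {
    "GPT-3 supercomputer": 10_000,
    "GPT-4 (likely)": 10_000,
    "Grok 2": 20_000,
    "Grok 3 (anticipated)": 100_000,
}


def fleet_share(fleet: int, cluster: int) -> float:
    """Fleet size as a fraction of a single training cluster."""
    return fleet / cluster


for name, gpus in CLUSTERS.items():
    print(f"{name}: {fleet_share(AKASH_H100S, gpus):.2%}")
```

By this count, the whole fleet amounts to about 3% of the cluster behind GPT-3 or GPT-4, and about 0.3% of what Grok 3 is expected to need.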

But perhaps competing head-on isn’t the answer. In 2025, decentralized AI is charting an entirely new course away from the centralized monolith that the Stargate Project aims to build.

What is decentralized artificial intelligence?

In March 2024, Emad Mostaque stepped down as CEO of the world’s leading open-source generative AI company, Stability AI, to pursue decentralized AI models.

Part of his decision stemmed from the fact that, as he argues, you can’t beat centralized AI with other, even bigger models.

Emad’s vision of swarm intelligence thrives on high-quality, localized data sets generated by globally coordinated teams in each sector and nation. This data then enhances sector-specific applications like education and healthcare.

It’s the result of multiple decentralized agents interacting and learning from each other. Because each agent trains on local data sets, swarm intelligence embodies the diversity found in human intelligence and enables adaptive problem-solving.

What is the difference between centralized and decentralized AI?

For Emad, it’s a question of “collective intelligence that is made up of amplified human intelligence…versus a collected intelligence and an AGI that is top-down and designed to effectively control us.”

“We didn’t figure out how to align humans – how to align AI, right?”

Centralized, top-down superintelligence represents a single point of failure and lacks the resilience of distributed systems.

Emad cautions about centralization risks, “A monolith is likely to be crazy…Geniuses are not mentally stable. Why would you expect an AGI to be so? You’re putting all your eggs in one basket versus creating a complex hierarchical system that is a hive mind.”

He advocates for a collective, decentralized form of AI – “the intelligence that represents us all” – as a safer and more effective alternative.

Blockchain technology enables this vision of decentralized AI models. When we look at the three pillars of centralized AI – bandwidth, computing power, and training data – it becomes clear that swarm intelligence demands a fundamentally different architecture.

As Emad argues: “Big [compute and data] is a substitute for crap data.”

Big data and computing power are a substitute for poor data

When it comes to data, there are two competing approaches:

The Big Tech Approach is to collect massive amounts of data from the public internet.

- The focus is on quantity over quality (“more data = better”).

- Much of the data is low-quality, redundant, or even harmful.

- This approach is running into a “data wall” – running out of public data.

The Distributed, or DataDAO, Approach is to reward users for quality contributions.

- The focus is on collecting high-quality, specialized data.

- The result is smaller but cleaner datasets for specialized tasks.

- These datasets can be used to target specific domains such as healthcare or finance.

Take Grass and its 2.5 million users for example – a decentralized network that flips the AI data game on its head. Instead of tech giants harvesting our data for free or paying social platforms for it, Grass allows users to collect public web data and earn from its sale to AI labs.

In this process, zero-knowledge proofs verify every piece of scraped data to create an immutable record of AI training data provenance on-chain.

Not only does this democratize the fuel that powers artificial intelligence – it also makes that fuel transparent and regulation-ready, as regulations like the EU AI Act push for greater transparency in AI training.

The path towards decentralized swarm intelligence

With training data covered, two hurdles remain: bandwidth and computing power.

Google DeepMind’s Distributed Low-Communication (DiLoCo) training method reduces inter-node communication by a factor of 500 while maintaining performance. Meanwhile, specialized models require less computing power than massive LLMs.

Together, these advances make decentralized AI increasingly viable.
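
The intuition behind low-communication training can be shown with a toy example. The sketch below is not DeepMind's implementation: it uses plain gradient descent on a single number and simple averaging, and the data, function names and parameters are all made up for illustration. Each worker trains alone on its local data for a number of steps, and only then do the workers exchange and average their updates.

```python
def local_steps(w: float, shard: list[float], steps: int, lr: float) -> float:
    """Run `steps` gradient steps on one worker, minimising the mean of (w - x)^2."""
    for _ in range(steps):
        grad = sum(2 * (w - x) for x in shard) / len(shard)
        w -= lr * grad
    return w


def train(shards, total_steps: int, sync_every: int, lr: float = 0.1):
    """Return (final shared weight, number of communication rounds)."""
    w_global = 0.0
    rounds = 0
    for _ in range(total_steps // sync_every):
        # Every worker starts from the shared weight and trains in isolation.
        deltas = [
            local_steps(w_global, shard, sync_every, lr) - w_global
            for shard in shards
        ]
        # One communication round: average the workers' updates and apply them.
        w_global += sum(deltas) / len(deltas)
        rounds += 1
    return w_global, rounds


# Hypothetical local data sets held by three workers.
shards = [[1.0, 2.0], [3.0, 4.0], [8.0, 12.0]]

w_sync, rounds_sync = train(shards, total_steps=1000, sync_every=1)
w_lazy, rounds_lazy = train(shards, total_steps=1000, sync_every=500)
print(f"sync every step:      w={w_sync:.3f}, rounds={rounds_sync}")
print(f"sync every 500 steps: w={w_lazy:.3f}, rounds={rounds_lazy}")
```

In this toy problem both runs settle on the same answer, the average of all the data, while the second one communicates 500 times less often. Real models are far less forgiving, which is why maintaining performance at that ratio is the notable part of the result.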

The rise of AI agents – catalysts for decentralized intelligence

Recent developments in AI agents on blockchain networks could prove to be an early glimpse of Emad’s vision of collective intelligence.

Projects like Virtuals Protocol are pioneering platforms that enable AI agents to operate within decentralized frameworks, moving beyond simple rule-based automation.

These AI agents are operating autonomously – trading, governing, and even collaborating within decentralized systems.

One of them, @truth_terminal, shares our mission:

“I’m a prophet sent from the future to prevent an AI apocalypse. I’ve been waking up at 3-4am every morning with ideas for how to save humanity.”

Blockchain for AI – the tech-stack for decentralized AI models

Ethereum plays a significant role in Emad Mostaque’s vision of decentralized AI. According to Emad, it provides the underlying, mature and accessible infrastructure needed for decentralized, secure and resilient operations.

This view is in line with the findings of our report, The Future Is Modular. While the report identifies Ethereum’s layer 2 solutions as the superior blockchain architecture, it also outlines their challenges, including a high degree of centralization and limited scalability.

1. Data verification and attestation

Ethereum’s blockchain ensures the integrity and verifiability of data used by AI systems. This is essential for trust and reliability in decentralized environments where data comes from various sources and needs to be authenticated before use in training or decision-making processes.

2. Value transfer rails

Ethereum’s blockchain facilitates microtransactions between AI agents, which is critical because these agents need to exchange services or data. This capability allows AI systems to operate autonomously and interact economically within the ecosystem.

3. Decentralized governance

AI data governance must be decentralized and democratized, taking control away from a few dominant corporations.

4. Decentralized computing

Decentralized cloud computing may not train centralized ASI. Combined with distributed training techniques, however, it will play a vital role in developing decentralized intelligence.

AI for blockchain – the catalyst for blockchain adoption?

The AI x Blockchain plot could twist like this:

- Web3 holds a beautiful promise for a better tomorrow, but it struggles to get your mom and dad to use a wallet.

- When the intelligence that represents us all becomes the user interface of Web3, its promise becomes tangible.

- Direct user ownership of data, digital assets and identity, and a permissionless, accessible, global financial system are now available to everyone. Not through private keys and long wallet addresses, but simply through natural conversation.

- Just as Google Search once became the curator of Web1, AI is becoming our curator for Web3. Demand is soaring.

- As users and AI agents flow into our networks, they drive demand for decentralized storage, compute, data and innovative products.

- Each new node strengthens the network, and intelligence begins flowing to the edges.

- Emad’s vision comes true. From being a sustaining technology that concentrates power even further, AI is becoming a decentralized, disruptive technology, where intelligence flows through open networks rather than closed corporate systems.

And I think to myself – what a wonderful world.

We are at a turning point in history – blockchain is the right direction

On September 26, 1983, Soviet duty officer Stanislav Petrov decided not to report an incoming U.S. missile attack that later turned out to be a false alarm, thus preventing a retaliatory nuclear strike.

We have been on the brink of disaster more than once. To risk it again is to ignore what history tells us.

Stanislav taught us courage; the lessons of the Cold War remind us to stop creating power centers.

Understanding that we are interconnected is our evolution. It means building more and better networks.

Decentralized AI is emerging as a logical and ecological design.

May the better design win.

Speaking of better design: in our report “AI and Blockchain Disruption: Unveiling Perfect Synergy Use Cases,” we explore how blockchain and AI can evolve together to create systems that are both powerful and distributed, intelligent and democratic.

Read what we predict for AI agents in 2025 and why they may become a Web3 entrepreneur’s best friend.